Exposed: Facebook Fails the Ethical Challenge of Transparency

Aidan White

Revelations in the Guardian about the rules and codes Facebook has developed to deal with violence, terrorism, hate speech and a multitude of other forms of online abuse will reinforce a much-needed global debate about ethics and standards.

What is astonishing about the Facebook Files story this week is that it offers the first glimpse inside this notoriously secretive company of how it manages information.

With almost two billion users, Facebook is already the first truly global media company. It has the power to shape the social, cultural and political landscape of the Western world and is already under intense scrutiny by governments in the United States and Europe.

And it is good business. The company has become eye-wateringly rich by using technology to sell the personal information of its users. In April this year it was valued at more than $350 billion.

Its business model promotes anything that generates clicks and interest, even if it is false or abusive. It gives no priority to information as a public good, such as journalism, and it is overwhelmed by the sheer volume of content.

As one insider told the Guardian: “Facebook cannot keep control of its content. It has grown too big, too quickly.”

The insights provided in the latest investigation show that although Facebook is not a traditional media company, it must take responsibility for how its technology is used.

Governments, such as those in Britain and Germany, have already condemned social media companies for failing to take sufficient action to tackle dangerous content. Now they are ready to impose fines and legal controls to force Facebook and others to stop the flow of malicious fake news and extremist content.

Traditional publishers are also calling for action. The draining away of advertising revenue by Google, Facebook and others has impoverished journalism and newsrooms and angered traditional media, not least because many believe these companies fail to acknowledge their responsibilities as publishers.

The American National Newspaper Publishers Association has called for the network to be regulated. At the beginning of June the World Association of Newspapers, meeting in South Africa, will launch an international expert group of around 150 media executives from 50 countries that aims to redefine relations between social media and the news industry. This expert group will launch with a report on how Facebook makes its money, a sore point for journalists and editors everywhere.

What this report and the story from The Guardian have in common is a focus on the culture of secrecy that surrounds Facebook and its work.

The company has a good line in well-meaning rhetoric and employs a number of bright sparks to defend its interests. Monika Bickert, the company’s Global Policy Chief, for instance, told The Guardian: “We feel responsible to our community to keep them safe and we feel very accountable. It’s absolutely our responsibility to keep on top of it. It’s a company commitment.”

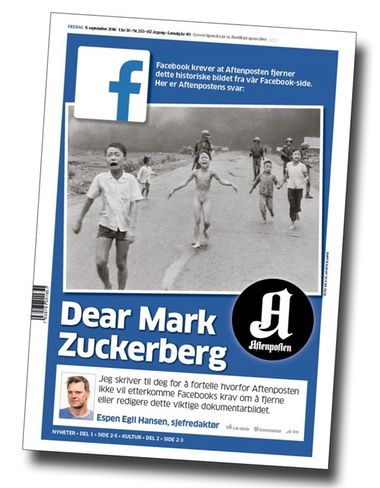

But the words sound hollow. Facebook rarely volunteers to change its approach. It appears to respond to appeals to take down dangerous content only when the complaints come from powerful advertisers or political leaders, or when there is orchestrated media outrage, such as over last year’s censorship of the famous Vietnam war-era picture of "Napalm girl" Phan Thi Kim Phuc.

Hitherto, the company has made changes only as a result of external pressure and even then those changes have been modest. As the Guardian reveals, videos of beheadings, a sexual assault on a child, and a man being stabbed were only removed when journalists asked about them – even though users had flagged the posts.

Central to the problem is that the company refuses to be transparent about the way it works. This latest report is a good example. Hundreds of millions of Facebook users have, for the first time, access to information on the company’s arrangements for monitoring and deleting content, but only thanks to insider leaks, the investigative journalism of a major news outlet and the anonymous testimony of employees.

This is itself a shocking indicator of how far Facebook has yet to travel before it reaches any credible point of democratic accountability.

Its algorithms work in mysterious and controversial ways, and the company refuses, for business reasons, to explain how they work. But this cannot be an excuse for its failure to be open about how it moderates information.

The moderators employed by Facebook face an impossible task in keeping the site free of extremist content, whether it’s from terrorists or deranged individuals committing acts of murder and torture. Although Facebook is reacting, and promises new artificial intelligence to try to remove unacceptable content more swiftly, it does not employ enough men and women to help get this out-of-control website back on track.

It says it will employ 3,000 more moderators to top up the existing team of 4,000. That will still be nowhere near enough. As the EJN has already pointed out, that will only provide one moderator for each 250,000 users.

It may well be that numbers alone will not solve the problem, but Facebook needs to be aware that transparency is not an extravagance; it goes to the heart of media accountability and democracy.

The work of Facebook raises legitimate questions about how it treats content, how it intersects with democracy, and how it influences the global information landscape. The company promises to do more, but its lack of respect for transparency and ethical standards remains an obstacle to building trust.

Read the article in Spanish here: Facebook se queda corto ante el desafío ético de la transparencia
